Who We Are

We help people pass complicated and difficult exams with shortcut Exam Braindumps that we collect from the professional team at Killexams.com.

What We Do

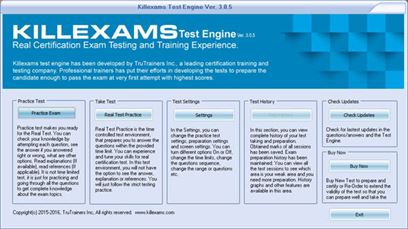

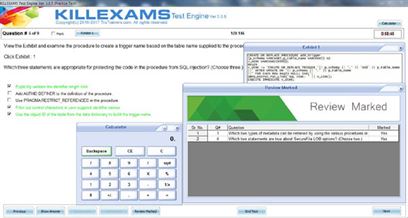

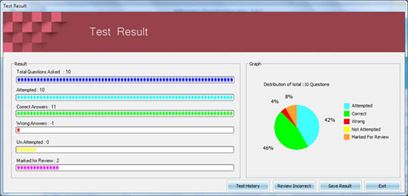

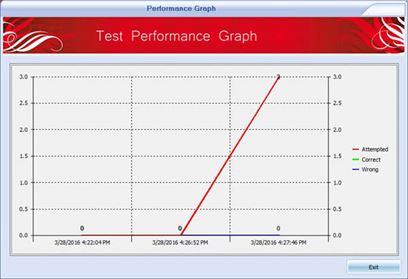

We provide actual questions and answers in Exam Braindumps that we obtain from killexams.com. These Exam Braindumps contain up-to-date questions and answers that help you pass the exam on the first attempt. Killexams.com develops an Exam Simulator for a realistic exam experience. The Exam Simulator helps you memorize and practice questions and answers. We take premium exams from Killexams.com.

Why Choose Us

The Exam Braindumps that we provide are updated on a regular basis. All questions and answers are verified and corrected by certified professionals. Online test help is provided 24x7 by our certified professionals. Our source of exam questions is killexams.com, which is the best certification Exam Braindumps provider in the market.